As artificial intelligence (AI) continues to disrupt industries and the way we do business, and increasingly takes on strategic decision-making within organizations, using it responsibly has become a higher priority than ever. While AI technologies offer many benefits, the risk of developing and using ethically questionable AI tools is a real concern for organizations. These concerns underscore the need for "Responsible AI" as a guiding principle, one that lets organizations leverage AI capabilities while staying aligned with their ethical values and avoiding harm to society.

This article explores the main aspects of Responsible AI: what it is, why it matters in today’s tech-driven landscape, and which principles underpin responsible AI systems.

What is Responsible AI?

Responsible AI refers to a framework for designing, developing and deploying AI systems in a way that proactively mitigates ethical risks and ensures those systems act in the best interest of humanity. PwC groups potential AI risks into six categories: performance, security, control, economic, societal and ethical.

Why is it important to have a responsible AI guideline in the age of artificial intelligence?

An organization without a responsible AI framework exposes itself to the risks that AI systems can create. The value of such a framework is easiest to grasp by looking at what has gone wrong in its absence:

One of the most notorious examples of unethical use of AI-powered analytics is the Facebook-Cambridge Analytica data scandal. In December 2022, Meta Platforms agreed to pay $725 million to settle a private class-action lawsuit over the improper sharing of user data.

Amazon's machine learning recruiting tool was designed to speed up resume screening but was later found to be biased against female candidates, because it learned its decision patterns from historical hiring data that already reflected human biases. According to Amazon insiders, the system was only trialed and was never used by Amazon recruiters to evaluate candidates.

Another area of regulatory contention is remote biometric identification, most notably facial recognition. The European Data Protection Board (EDPB) advocates a stricter regulatory approach because of the technology's high risk of intruding into individuals' private lives. Researchers have also shown that facial recognition algorithms have different error rates across demographic groups: according to one study, they are most accurate for lighter-skinned men, while accuracy can drop by up to 34 percentage points for darker-skinned women.

AI is still relatively new to consumers, and in light of failures like these, consumers expect businesses to develop AI systems more responsibly. Implementing a responsible AI framework is therefore a crucial strategic decision for organizations that want to accelerate their AI journey.

What are the fundamental principles of building an ethical AI system?

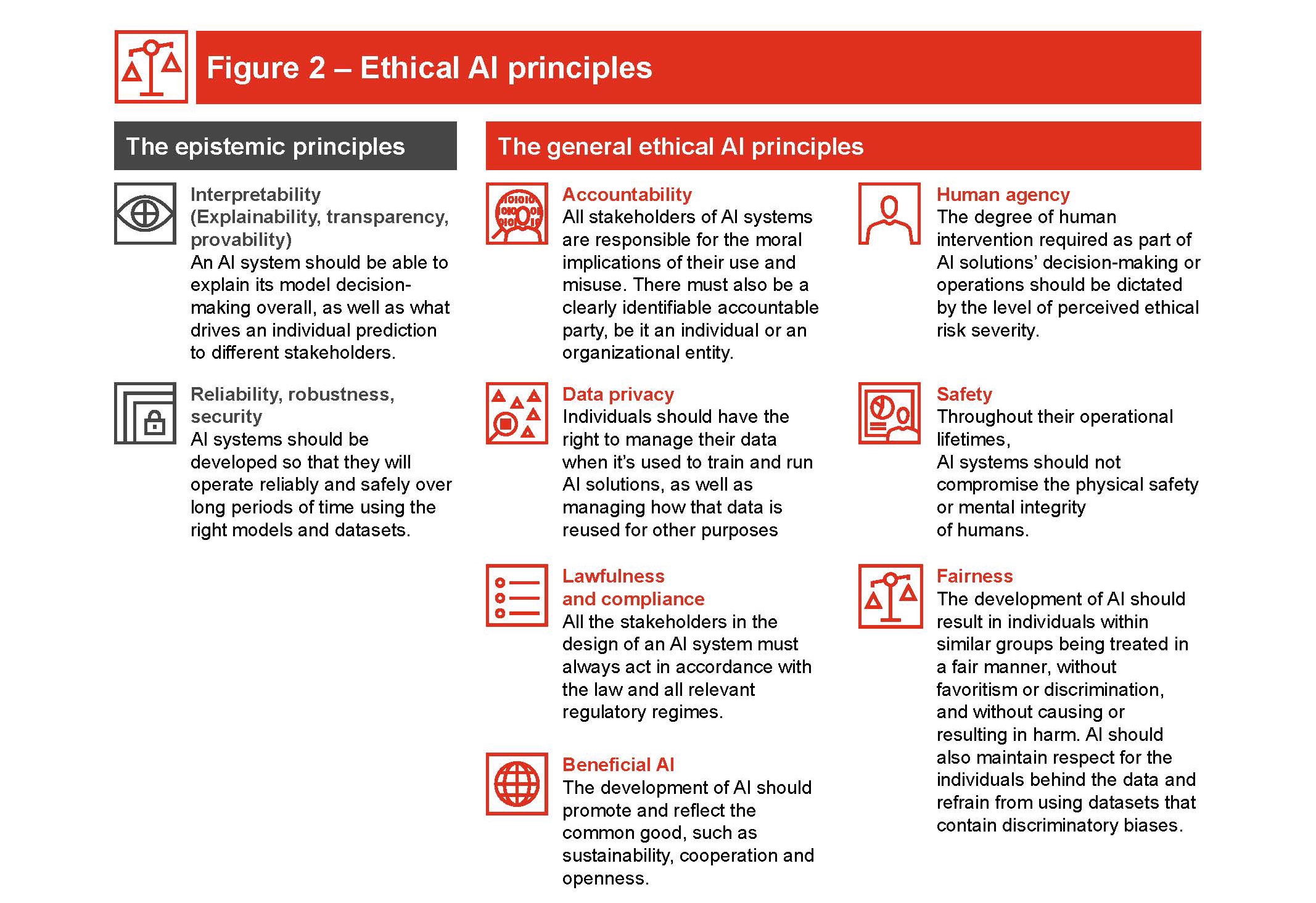

PwC groups the principles of an ethical AI system into two categories, epistemic principles and general ethical AI principles, as follows:

Epistemic principles

Transparency & Explainability: AI systems are trained on historical datasets to make data-driven decisions, but the robustness of their outputs can be compromised by factors such as bias or low-quality data. Responsible AI therefore relies on explainable AI technologies to make decision-making open and trustworthy. These technologies provide meta-information explaining "why" and "how" a decision was made and "what" features most influenced it.
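For a linear scoring model, the simplest form of this "what features mattered" explanation is exact: the score is a sum of per-feature contributions (weight × value), so ranking those contributions shows what drove the decision. The sketch below assumes a hypothetical credit-scoring model whose feature names and weights are invented for illustration; non-linear models usually need approximation techniques such as SHAP or LIME instead.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Toy linear scoring model; the weights used below are hypothetical."""

    weights: dict[str, float]
    bias: float

    def score(self, features: dict[str, float]) -> float:
        """Model output: bias plus the weighted sum of feature values."""
        return self.bias + sum(self.weights[name] * value for name, value in features.items())

    def explain(self, features: dict[str, float]) -> list[tuple[str, float]]:
        """Each feature's contribution (weight * value), largest magnitude first."""
        contributions = [(name, self.weights[name] * value) for name, value in features.items()]
        return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical credit-scoring model and applicant, for illustration only.
model = LinearModel(
    weights={"income": 0.4, "debt_ratio": -1.5, "years_employed": 0.2},
    bias=0.1,
)
applicant = {"income": 2.0, "debt_ratio": 0.8, "years_employed": 3.0}

print(f"score = {model.score(applicant):.2f}")
for name, contribution in model.explain(applicant):
    print(f"{name:>15}: {contribution:+.2f}")
```

Here the explanation would tell a reviewer that the applicant's debt ratio pulled the score down more than income and tenure pushed it up.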

Robustness & Reliability: According to PwC’s study, concerns about the reliability and robustness of AI applications' performance and output over time are the top ethical challenge organizations face. A responsible AI framework enables businesses to identify weaknesses in model outputs and track performance over the long term.
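Long-term performance tracking can start with something as simple as comparing a rolling accuracy window against a baseline. The sketch below is a hypothetical monitor that assumes ground-truth labels eventually arrive for each prediction; the window size and tolerance are illustrative values, not recommendations.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Hypothetical monitor: flags a model when rolling accuracy drops below
    baseline - tolerance. Window size and tolerance are illustrative assumptions."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline
        self.tolerance = tolerance
        # 1 = correct prediction, 0 = wrong; the oldest outcome drops off when full.
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual) -> None:
        """Log one prediction once its ground-truth label is known."""
        self.outcomes.append(1 if prediction == actual else 0)

    @property
    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self) -> bool:
        """True once the window is full and accuracy is below the allowed floor."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy < self.baseline - self.tolerance


# Simulated stream of (prediction, actual) pairs: 10 correct, then 4 wrong.
monitor = RollingAccuracyMonitor(baseline=0.90, tolerance=0.05, window=10)
for prediction, actual in [(1, 1)] * 10 + [(1, 0)] * 4:
    monitor.record(prediction, actual)
    if monitor.degraded():
        print(f"Alert: rolling accuracy {monitor.accuracy:.2f} is below the allowed floor")
        break
```

Waiting for a full window before alerting avoids false alarms from the first few noisy predictions, at the cost of detecting degradation a little later.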

Security: AI systems can be hacked. This significant risk must be thoroughly investigated and addressed before these technologies are widely used in business applications, because a compromised AI system can cause disastrous losses for an organization.

General ethical AI principles

Accountability: All stakeholders involved with AI systems, from development to marketing, should understand their responsibility towards the general public and be held accountable for any unintended consequences of AI output. This principle deserves particular attention in healthcare AI use cases, where unintended consequences can be life-threatening.

Bias and Fairness: One of the biggest technical challenges in building an ethical AI system is addressing bias and fairness in the datasets and algorithms used to build it, and there is no straightforward solution. First, biases exist in humans, and since datasets and algorithms are designed by humans, it is almost impossible to eliminate bias entirely. Second, there are more than 20 mathematical definitions of fairness, and they often conflict: a system designed to satisfy one definition will generally fail to satisfy some of the others, and several common definitions cannot all hold at once except in special cases.
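The conflict between fairness definitions is easy to see on a toy example. The sketch below uses made-up labels and predictions for two hypothetical groups and computes two common metrics: demographic parity (equal selection rates across groups) and equal opportunity (equal true positive rates across groups). The same predictions look fair under the first definition and unfair under the second.

```python
def selection_rate(y_pred):
    """Share of individuals who receive the positive outcome."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true, y_pred):
    """Share of truly positive individuals who are predicted positive."""
    predictions_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(predictions_for_positives) / len(predictions_for_positives)


# Hypothetical toy data: true labels and model predictions for two groups.
groups = {
    "A": {"y_true": [1, 1, 0, 0], "y_pred": [1, 0, 1, 0]},
    "B": {"y_true": [1, 0, 0, 0], "y_pred": [1, 1, 0, 0]},
}

rates = {
    name: {
        "selection": selection_rate(g["y_pred"]),
        "tpr": true_positive_rate(g["y_true"], g["y_pred"]),
    }
    for name, g in groups.items()
}

# Demographic parity compares selection rates; equal opportunity compares TPRs.
dp_gap = abs(rates["A"]["selection"] - rates["B"]["selection"])
eo_gap = abs(rates["A"]["tpr"] - rates["B"]["tpr"])
print(f"Demographic parity gap: {dp_gap:.2f}")  # 0.00: "fair" under this definition
print(f"Equal opportunity gap:  {eo_gap:.2f}")  # 0.50: "unfair" under this one
```

Both groups are selected at the same rate, yet qualified members of group A are approved only half as often as those of group B, so choosing a fairness definition is a design decision rather than a purely technical one.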

Data Privacy / Lawfulness / Compliance: Because AI systems process vast amounts of personal data, they must comply with regulations such as GDPR or CCPA, as well as with the privacy regulations specific to each industry.
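One common privacy safeguard is pseudonymizing direct identifiers before data reaches an AI pipeline. The sketch below replaces assumed identifier fields with keyed HMAC-SHA256 hashes, so the same person always maps to the same token (records can still be joined) but the original value cannot be read without the key. The key and field names are hypothetical, and pseudonymization alone does not make data anonymous: under GDPR, pseudonymized data still counts as personal data.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be stored separately from the data
# (for example in a key management system) so data users cannot reverse the mapping.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Assumed names of the fields that directly identify a person.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize(record: dict) -> dict:
    """Replace direct identifiers with keyed hashes; keep other fields unchanged."""
    result = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            digest = hmac.new(SECRET_KEY, str(value).encode("utf-8"), hashlib.sha256)
            result[field] = digest.hexdigest()
        else:
            result[field] = value
    return result


record = {"name": "Jane Doe", "email": "jane@example.com", "age": 34}
print(pseudonymize(record))
```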

AI Safety / Beneficial AI: AI systems should be designed to address potential misuse, harmful consequences, or accidents that could jeopardize ecosystems, and promoting the common good should motivate AI researchers and developers. In essence, businesses must aim to make AI systems moral and beneficial. As the table above shows, organizations lack confidence in their ability to detect and shut down malfunctioning AI systems promptly, which suggests that preventive solutions may be preferable to protective ones.

Human Agency: AI systems should not undermine human autonomy or cause adverse effects, and the level of human oversight should reflect the risk factors of each specific use case. Human control can be facilitated through AI governance mechanisms such as a human-in-the-loop (HITL), human-on-the-loop (HOTL), or human-in-command (HIC) approach.
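A human-in-the-loop approach is often implemented by letting the model act automatically only when it is confident and routing every other case to a person. The sketch below assumes a hypothetical classifier that returns a label with a confidence score and a hypothetical reviewer callback; the 0.9 threshold is illustrative and in practice would depend on the risk level of the use case.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Prediction:
    label: str
    confidence: float  # model's estimated probability for `label`, between 0 and 1


@dataclass
class Decision:
    label: str
    decided_by: str  # "model" or "human"


def route(
    prediction: Prediction,
    human_review: Callable[[Prediction], str],
    threshold: float = 0.9,
) -> Decision:
    """Act automatically only on confident predictions; defer the rest to a human."""
    if prediction.confidence >= threshold:
        return Decision(prediction.label, "model")
    # Low confidence: a human reviewer makes the final call (human-in-the-loop).
    return Decision(human_review(prediction), "human")


# Hypothetical reviewer callback; a real system might enqueue the case instead.
def reviewer(prediction: Prediction) -> str:
    print(f"Escalated to human: model suggested {prediction.label!r} "
          f"with confidence {prediction.confidence:.2f}")
    return "reject"


auto = route(Prediction("approve", 0.97), reviewer)
escalated = route(Prediction("approve", 0.62), reviewer)
print(auto)       # Decision(label='approve', decided_by='model')
print(escalated)  # Decision(label='reject', decided_by='human')
```

A human-on-the-loop variant would instead let the model act on every case while a person monitors its decisions and can intervene or override them.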